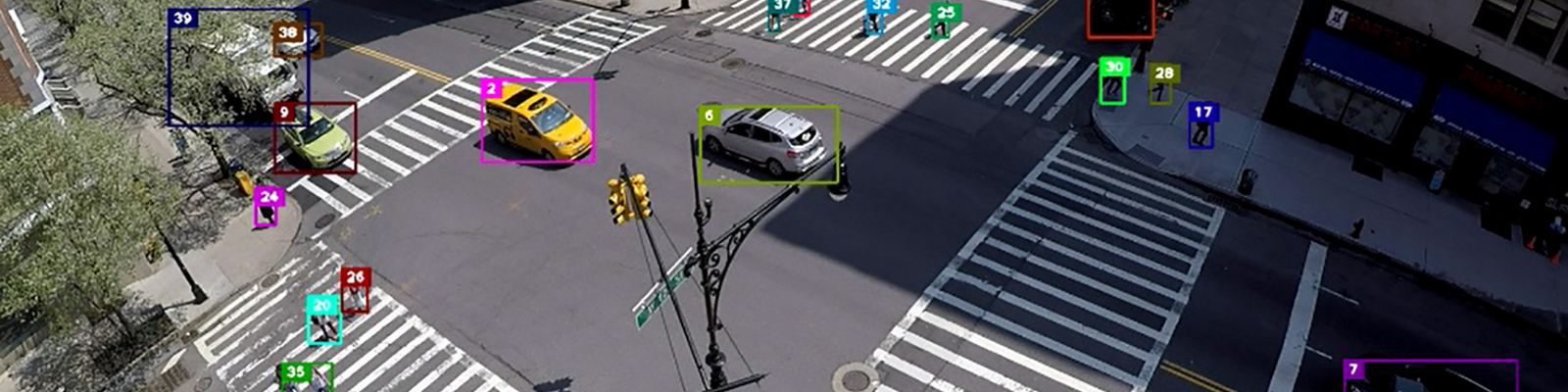

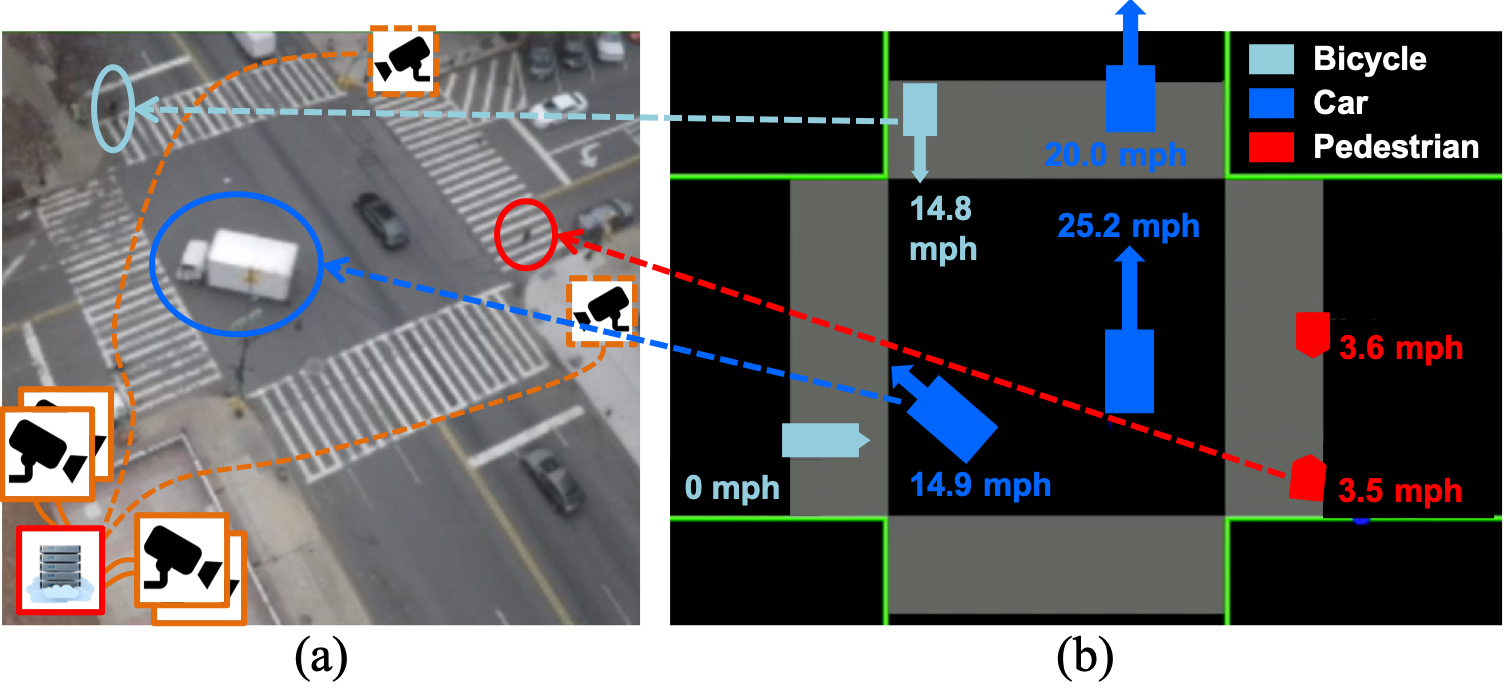

[1] Shiyun Yang, Emily Bailey, Zhengye Yang, Jonatan Ostrometzky, Gil Zussman, Ivan Seskar, Zoran Kostic, “COSMOS Smart Intersection: Edge Compute and Communications for Bird’s Eye Object Tracking,” IEEE Percom – SmartEdge 2020, 4th International Workshop on Smart Edge Computing and Networking, Mar. 2020. [download]

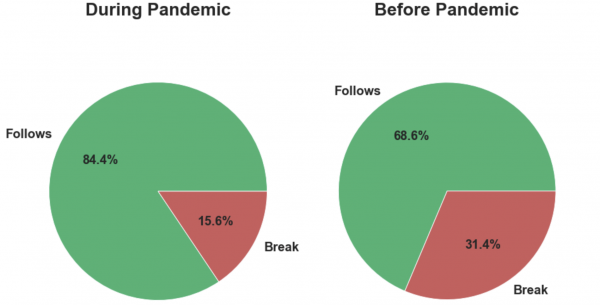

[2] M. Ghasemi, “Auto-SDA: Automated video-based social distancing analyzer,” ACM SIGMETRICS Performance Evaluation Review, vol. 49, no. 2, pp. 69–71, Sep. 2021. [download] ACM SIGMETRICS’21 SRC finalist

[3] M. Ghasemi, Z. Kostic, J. Ghaderi, and G. Zussman, “Auto-SDA: Automated Video-based Social Distancing Analyzer,” in Proc. 3rd Workshop on Hot Topics in Video Analytics and Intelligent Edges (HotEdgeVideo’21), 2021. [download] [presentation]

[4] M. Ghasemi, Z. Yang, M. Sun, H. Ye, Z. Xiong, J. Ghaderi, Z. Kostic, and G. Zussman, “Demo: Video-based social distancing evaluation in the COSMOS testbed pilot site,” in Proc. ACM MOBICOM’21, 2021. [download] [poster]

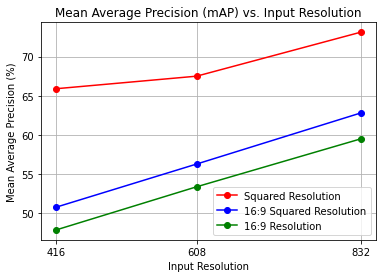

[5] Zhuoxu Duan, Zhengye Yang, Richard Samoilenko, Dwiref Snehal Oza, Ashvin Jagadeesan, Mingfei Sun, Hongzhe Ye, Zihao Xiong, Gil Zussman, Zoran Kostic, “Smart City Traffic Intersection: Impact of Video Quality and Scene Complexity on Precision and Inference,” in Proc. 19th IEEE International Conference on Smart City, Dec. 2021. [download]

[6] Z. Yang, M. Sun, H. Ye, Z. Xiong, G. Zussman, and Z. Kostic, “Birds eye view social distancing analysis system,” arXiv:2112.07159 [cs.CV], Dec. 2021. [download]

[7] Z. Yang, M. Sun, H. Ye, Z. Xiong, G. Zussman, and Z. Kostic, “Bird’s-eye View Social Distancing Analysis System,” in Proc. IEEE ICC 2022 Workshop on Edge Learning for 5G Mobile Networks and Beyond, May 2022. [download]

[8] Z. Kostic, A. Angus, Z. Yang, Z. Duan, I. Seskar, G. Zussman, and D. Raychaudhuri, “Smart city intersections: Intelligence nodes for future metropolises,” arXiv:2205.01686v2 [cs.CV], May 2022. [download]

[9] M. Ghasemi, S. Kleisarchaki, T. Calmant, L. Gürgen, J. Ghaderi, Z. Kostic, and G. Zussman, “Demo: Real-time camera analytics for enhancing traffic intersection safety,” in Proc. ACM MobiSys’22, June 2022. [download]

[10] A. Angus, Z. Duan, G. Zussman, and Z. Kostic, “Real-Time Video Anonymization in Smart City Intersections,” in Proc. IEEE MASS’22 (invited), Oct. 2022. [download] [presentation] [video] [dataset]

[11] Z. Kostic, A. Angus, Z. Yang, Z. Duan, I. Seskar, G. Zussman, and D. Raychaudhuri, “Smart city intersections: Intelligence nodes for future metropolises,” IEEE Computer, Special Issue on Smart and Circular Cities, Dec. 2022. [download]

[12] M. Ghasemi, S. Kleisarchaki, T. Calmant, J. Lu, S. Ojha, Z. Kostic, L. Gürgen, G. Zussman, and J. Ghaderi, “Demo: Real-time Multi-Camera Analytics for Traffic Information Extraction and Visualization,” in Proc. IEEE PerCom’23, Mar. 2023. [download]

[13] M. Ghasemi, Z. Yang, M. Sun, H. Ye, Z. Xiong, J. Ghaderi, Z. Kostic, and G. Zussman, “Video-based social distancing: evaluation in the COSMOS testbed,” IEEE Internet of Things Journal (to appear), 2023. [download]